It underscores the role these disinformation campaigns play in people's overall lives and health: avoidance of perfectly safe foods, increased health anxiety, negative impacts on health outcomes, and more.

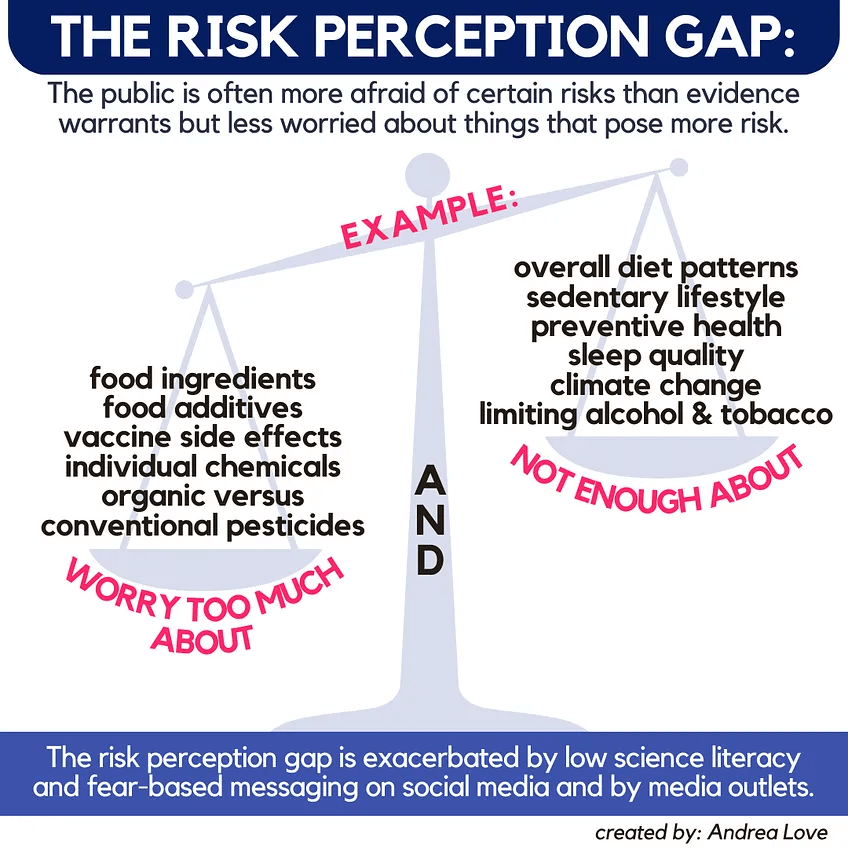

Are we worrying too much about things that pose a low risk and not enough about things that pose a greater risk?

These unfounded worries are a result of the risk perception gap: the disparity between actual and perceived risks.

It is a psychological phenomenon in which fear or concern about specific risks strays from the evidence, the scientific consensus, and the probability that the risk will actually occur.

The gap is particularly pronounced in health, science, and public health, where complex information and uncertainty can lead people to distrust experts' interpretations of data and instead adopt alternative interpretations offered by other members of the public, social media influencers, and misinformation organizations.

This gap causes substantial harm beyond the example illustrated above, in which people have been genuinely afraid to feed their children safe and nutritious foods. The harms are multifaceted, affecting both individual decision-making and broader public policy (California AB418 and its ban on safe food ingredients, anyone?), because both are shaped by misunderstandings of what endangers us and what does not.

The Environmental Working Group's body of work is a long-standing example of this. Its fear-laden claims about chemicals, whether food ingredients, pesticides, herbicides, or others, are exaggerated far beyond any risk these trace levels of substances could ever pose to us. At the same time, the group creates fear about conventional food products and drives people to organic products, which are, on average, 50% more expensive than their conventional counterparts and offer zero health, nutritional, ecological, environmental, or farm worker benefits. And, contrary to widely held beliefs, organic products are not grown without pesticides.

For more on organic products and organic farming, tune in to the two-part podcast:

People are not good at assessing risk. When we encounter statements that evoke emotions, especially negative emotions like anxiety or fear, our 'logical brain' is overridden by the primitive emotional brain. This is exacerbated by clickbait media coverage, social media echo chambers, and low science literacy.

As a result, people often worry excessively about things that pose almost zero risk to us, like:

- Individual food ingredients

- Trace levels of pesticides, herbicides, and plant growth retardants in foods

- Food additives

- Vaccine side effects or adverse events

- Vaccine ingredients

- Organic food versus conventional food

At the same time, they don't worry enough about things that have a far greater impact on us or pose a far greater risk, like:

- Social determinants of health

- An overall diverse diet

- Preventive healthcare

- Staying up-to-date on vaccines

- A sedentary lifestyle

- Good sleep habits

- Alcohol and tobacco consumption

- Climate change

As a result, a single study in animal models can be blown wildly out of proportion, while everyday threats that are perhaps less 'sexy' but larger receive less attention than they should because they are not 'newsworthy'. The risk perception gap is especially harmful when it comes to science and health topics.

Vaccine hesitancy and anti-vaccine rhetoric

One of the most prominent examples of the risk perception gap is vaccine hesitancy, where the public's perceived risk of vaccine side effects or of vaccine ingredients is often much higher than what the scientific evidence supports.

In contrast, the benefits of vaccination and its ability to prevent deadly diseases (remember, measles is back) are underestimated. This is true of nearly all vaccines today, with many people downplaying the substantial positive impact that vaccines have on individual and collective health (I discussed the harm of prominent figures, like Andrew Huberman, doing this here). As a result of this gap, vaccination rates against preventable illnesses are declining, and outbreaks of diseases that were previously eliminated or well controlled are increasing.

Food safety

Similarly, the risks associated with trace levels of chemicals used to grow crops are vastly exaggerated. This creates fear of more affordable conventional foods, rooted in misinformation and a misunderstanding of chemistry and toxicology, even though there is no credible scientific evidence to support these claims.

Remember, 'chemical' isn't a bad word; everything is made of chemicals. You're a sack of chemicals. With literally everything, the dose makes the poison.

This leads to reduced consumption of demonstrably safe and nutritious foods like fresh produce, especially among lower-income families. That reduced consumption is what actually poses the greater risk: we know most Americans don't consume enough fiber, which is critical for health in a variety of ways, including gastrointestinal health, cardiovascular health, immune system function, reduced risk of certain cancers, and even reduced risk of all-cause mortality.

Overestimation of rare risks and underestimation of common risks

People often overestimate the risk of rare but dramatic, newsworthy threats (like Ebola, "mad cow disease", or shark attacks) while underestimating the risks of more common, yet less sensational, health issues (like heart disease and type 2 diabetes).

This perception gap can lead to misallocation of resources, both in personal decisions and in public health policy. For example, individuals may fixate on unlikely health issues and spend their money on ineffective or potentially dangerous interventions instead of evidence-based measures like well visits, routine vaccinations, a gym membership, etc. In policy, we see this in disproportionate funding for less common diseases, or even in cuts to important public health initiatives (yes, our legislators are key players in this, and they are most definitely not immune to personal biases and risk perception gaps).

It also leads to a lack of awareness of the 'big picture' of health, including overall lifestyle patterns and equitable access to healthcare, preventive health screenings, routine vaccinations, and affordable medications. Meanwhile, people focus on things that don't actually benefit health, like unnecessary and unregulated supplements and 'health hacks' that cater to privileged and wealthy populations.

Misunderstanding of scientific processes and results

Low science literacy often leads the public to misunderstand the nature of scientific uncertainty and the process of scientific discovery. For example, in vitro or animal studies are frequently used as 'evidence' to refute more robust human clinical and epidemiological evidence, which is not appropriate.

We saw this during the COVID-19 pandemic, when public health guidance changed as we learned more about an entirely novel virus, and those changes led to confusion and mistrust among the public. Instead of being understood as the normal result of new data altering our interpretation, the changes were perceived as "flip-flopping", and as a result, many people stopped listening to expert recommendations that could have saved lives.

This gap hinders effective communication of scientific findings, but more than that, it is exploited to foster rejection of science and disbelief about scientific consensus.

Bothsidesism (false balance)

Bothsidesism is the presentation of opposing views as more equivalent than the credible scientific evidence warrants, which ultimately misrepresents the scientific consensus. Presenting two opposing views as equally valid distorts public understanding and exacerbates the risk perception gap.

Vaccines

The overwhelming majority of scientific research supports the safety and efficacy of vaccines. However, the public's perception of vaccine safety varies significantly, often influenced by misinformation and sensationalized media coverage of anti-vaccine arguments. Media outlets often present anti-vaccine viewpoints alongside the scientific consensus, giving undue weight to a fringe perspective. This false balance can lead the public to believe that there is significant scientific disagreement about vaccine safety when there is none. The risk perception gap around vaccines can lead to lower vaccination rates, resulting in outbreaks of preventable diseases, public health crises, and loss of herd immunity. For example, although only 52 physicians were behind anti-COVID-19 vaccine rhetoric, the public perceived the medical community as much more evenly 'split'.

Climate change

There is a broad consensus among scientists about the reality and urgency of climate change, which is driven largely by human activities. However, public opinion on the issue is more divided, often influenced by political and ideological beliefs. The media's portrayal of climate change often includes voices that deny or downplay its severity, presenting these views as equally valid to the scientific consensus. This false equivalence can create a perception that the scientific community is divided on the issue, which is not the case. Misrepresenting the scientific consensus on climate change can delay critical policy actions and public support for measures needed to mitigate and adapt to climate impacts, exacerbating environmental and economic risks.

General implications of bothsidesism

Continuous exposure to conflicting views, especially when one side is not supported by evidence, erodes public trust in scientific expertise and institutions. The perceived lack of consensus can lead to policy inaction or ineffective policy responses, especially in areas where swift action is crucial. Issues like vaccines and climate change become entrenched in political and ideological identities, making rational discourse and consensus-building more challenging.

Bothsidesism significantly harms public understanding of science, leads to poor health and environmental outcomes, and undermines effective policymaking. It’s crucial for media and public communication strategies to accurately represent the weight of scientific evidence to bridge this gap.

The risk perception gap has many potential harms

The distorted perception of real versus believed risks poses obvious harms:

- Misguided Personal Choices: Individuals may make poor health choices based on inaccurate risk perceptions, such as neglecting preventive measures or engaging in risky behaviors.

- Policy and Resource Misallocation: Governments and health organizations may allocate resources inefficiently, focusing on less impactful but more sensational risks.

- Erosion of Trust in Science and Public Health Institutions: When public perceptions do not align with scientific evidence, it can erode trust in experts and institutions, leading to resistance against public health measures. This makes it much more difficult to get buy-in for a variety of interventions that have data to support them, from routine vaccinations to overall lifestyle habits, to voting for specific policies during elections.

- Increased Health Disparities: Groups with limited access to accurate information may be particularly affected by the risk perception gap, exacerbating health disparities. For example, in the context of food misinformation, individuals with lower socioeconomic status are more likely to buy and eat fewer fruits and vegetables when they encounter false messaging about the harms of pesticides. Eating less produce poses a FAR greater risk than the essentially nonexistent risk of trace pesticide residues.

- Delayed Response to Emerging Threats: Underestimating certain risks leads to delayed responses to emerging health threats, worsening public health outcomes. This is especially the case with issues such as climate change, where the effects of decisions and policies made now will only be measurable years down the line.

Science literacy is critical, so that people can understand the difference between anecdotes and robust evidence, and more appropriately evaluate the information they encounter. All of us can take steps to enable this so that our society on the whole is better equipped to navigate the deluge of fear-based messaging that only serves to hinder scientific progress and cause harm.

Dr. Andrea Love, a microbiologist and immunologist, is a public health consultant and science communicator. Follow her at ImmunoLogic and read her articles on Substack. Find Andrea on X @dr_andrealove

A version of this article was originally posted at Dr. Andrea Love’s blog ImmunoLogic and is reposted here with permission. Any reposting should credit both the GLP and the original article.