But innovators are pushing the human-machine boundary even further. While some prosthetic limbs are already tied in to a person’s nervous system, future blends of biology and technology may be seen in computers that are wired into our brains.

Our ability to technologically enhance our physical capabilities (the “hardware” of our human systems, you could say) will likely reshape our social world. Will these changes bring new forms of dominance and exploitation? Will unaltered humans be relegated to a permanent underclass or left behind altogether? And what will it mean to be human, or will some of us be more than human?

Initial answers may be closer than we think.

Physicist Max Tegmark, MIT professor and president of the Future of Life Institute, considers the recent advances in artificial intelligence and technology through an evolutionary lens to imagine us as “more than human.” He categorizes all life into three levels. In his view, the vast majority of life—from bacteria to mice, iguanas to lobsters—falls into what he calls Life 1.0. These creatures survive and replicate, but they cannot redesign themselves within their lifetime. They evolve and “learn” over many generations.

Moving up, somewhere between Life 1.0 and 2.0, Tegmark classifies animals such as some primates, cetaceans, and corvids that have the ability to intermesh biology and culture. These animals are able to learn complex new skills, like how to use tools. Humans take this to an extreme, and Tegmark categorizes humans as Life 2.0. Through extensive language, social intelligence, and culture, Life 2.0 individuals can jump into new environments independently of genetic constraints. (If you missed it, we wrote about how body modification, as one example, makes us more socially human in part I, “Your Body as a Map,” of this pair of posts.)

Just think about how our ability to learn a new language within our lifetime is a bit like adding a software package to a computer. We can install countless “self” upgrades during our lifetime and pass our knowledge on to future generations. We can also manipulate other life forms to our own ends on a grand scale, from cattle farming to harnessing bacteria to make fermented foods like cheese.

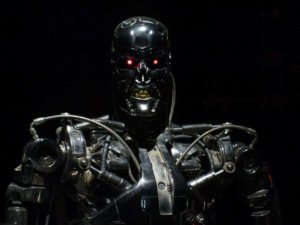

But with the leaps we’re seeing in artificial intelligence, neuroscience, and biotechnology, our concepts of “animal” and “human” could be upended in ways that rival the most imaginative Hollywood film. Life 3.0 doesn’t yet exist on Earth, but Tegmark argues that in the future we will see a technological life-form that can design both its hardware (which neither 1.0 nor 2.0 can do) and its software (which currently only 2.0 can do).

Even in the near future, humans may be somewhere in between life-forms 2.0 and 3.0. In 2016, Elon Musk, CEO of Tesla and SpaceX, co-founded Neuralink, a company that aims to develop a brain–computer interface. Musk says his goal is to help human beings merge with software and be in sync with advances in artificial intelligence.

Whether people will volunteer to have a robot insert wires, attached to a tiny implanted chip, into their brains remains to be seen. But humans across cultures have embraced a variety of technologies in surprising ways.

Today over 5 billion people have access to mobile phones. By 2025, around 71 percent of the world’s population is expected to be connected. The thought that virtually every aspect of a person’s day might be influenced by a smartphone or something like it once seemed like science fiction. But as the number of “digital natives” grows, our relationship with technology deepens too.

Some of us readily anthropomorphize our gadgets, and many of our apps and devices carry human names such as Siri or Alexa. We talk to them and allow them to control our surroundings, finances, shopping, and schedules. Yet many of us hesitate to embed technology in our bodies if we are otherwise physically healthy.

Take, for example, microchips inserted under the skin, which can be used to pay for your shopping as well as a bus ride home. This is little different from a credit card in your back pocket, save for the convenience of not having to remember to take it with you.

Our resistance may be influenced by the “yuck factor” of new or different technologies or cultural shifts. But over time, what we think of as disgusting or offensive may become normalized. Lab-grown meat, for example, has gone from being a scientific and economic fantasy to something that might well be in stores by 2022. Similarly, eating insects, long unfamiliar to many people in the West, has become more accepted there as a sustainable source of protein.

Even if more of us grow to accept the idea of implants, is Life 3.0 a genuine possibility? For now, mind-controlled prosthetics are the closest innovation that hints at a Neuralink-type future. Such prosthetics are still in relatively early stages of development and not universally available. Nonetheless, as far as Musk is concerned, many of us are already cyborgs, with an in-depth digital version of ourselves in the form of social media, email, and much more. His team, or others, may well inch us toward a version of Life 3.0.

Other early signs of how technologically integrated lives might function, and how they might affect individuals and societies, are visible in places such as Scandinavia, where checks and cash are on their way out. In Denmark, for example, the majority of citizens make payments using their mobile phones. The absence of cash has had a direct effect on homeless people. Without smartphones of their own, homeless individuals were unable to receive payments for the newspapers they sold to earn money.

The solution was to provide homeless people with smartphones (and thus mobile payment methods). No longer a luxury, mobile phones became a basic tool vital for anyone engaging in modern society in Denmark.

Once we start to see integrated technology as a social essential, a thorny possibility emerges: a world with a new path to social or class dominance, perhaps a division between those who can and those who cannot afford to interface with technology. It begins to sound like the plot of the 20th-century dystopian novel Brave New World.

In that new world, would the Life 2.0 human without enhancements be relegated to a servile underclass? Perhaps this reflects a false dichotomy. After all, millions of people living in relatively remote regions around the planet have been able to “fast-track” to mobile technology, effectively skipping over earlier versions of the telephone and other communication technologies.

Nonetheless, developers of integrated technologies involving invasive surgery would be wise to consider the social ramifications of their work. Today we can accurately reconstruct the wealth distribution of an entire nation based on individual phone records. Can we predict the negative social impacts of a future Life 3.0? If contemporary clues are any guide, yes, we can. But whether we choose to ameliorate those impacts or not still lies within our control.

Matthew Gwynfryn Thomas is a data scientist and anthropologist working in the nonprofit sector in London, U.K. His current work combines machine learning and social science to address the needs of people in crisis. He has also written popular science articles for a variety of outlets, including BioNews, SciDev.Net, and the Wellcome Trust Blog. Follow him on Twitter @matthewgthomas

Djuke Veldhuis is an anthropologist and science writer based at Monash University in Australia, where she is a course director in the B.Sc. advanced–global challenges degree program. Her Ph.D. research examined the effects of rapid socioeconomic change on the health and well-being of people in Papua New Guinea. She has written for a range of popular science outlets, including SciDev.Net, Asia Research News, and New Scientist. Follow her on Twitter @DjukeVeldhuis

A version of this article was originally published at the Conversation and has been republished here with permission.