This isn’t an uncommon experience, as anyone who follows the latest scientific news will know. Sometimes it feels like we are constantly bombarded with contradictory claims on every possible topic from climate change to cancer treatments. How do we know what’s true and how are we supposed to put recommendations from scientific studies into practice if scientists cannot seem to agree among themselves?

Luckily, scientists have a tool that can not only help sort through large amounts of confusing data but also reveal conclusions that were statistically invisible when the information was first collected. This practice of “meta-analysis” is what helped researchers see there was a problem with neonicotinoids, paving the way for the risk assessment that ultimately led to the ban. In fact, meta-analysis is now so widespread that it affects our lives on a daily basis.

To understand how this process works, we need to know why scientific studies can contradict one another. Initial studies on new and topical subjects make attractive headlines due to their novelty. But these early studies are often small and usually severely overestimate the effects they are assessing. As a result, their conclusions are often overturned by follow-up studies.

The problem has become worse over the last few decades, as modern technologies have allowed scientists to generate new data much faster than before. This has often resulted in a sequence of extreme, opposite results being published.

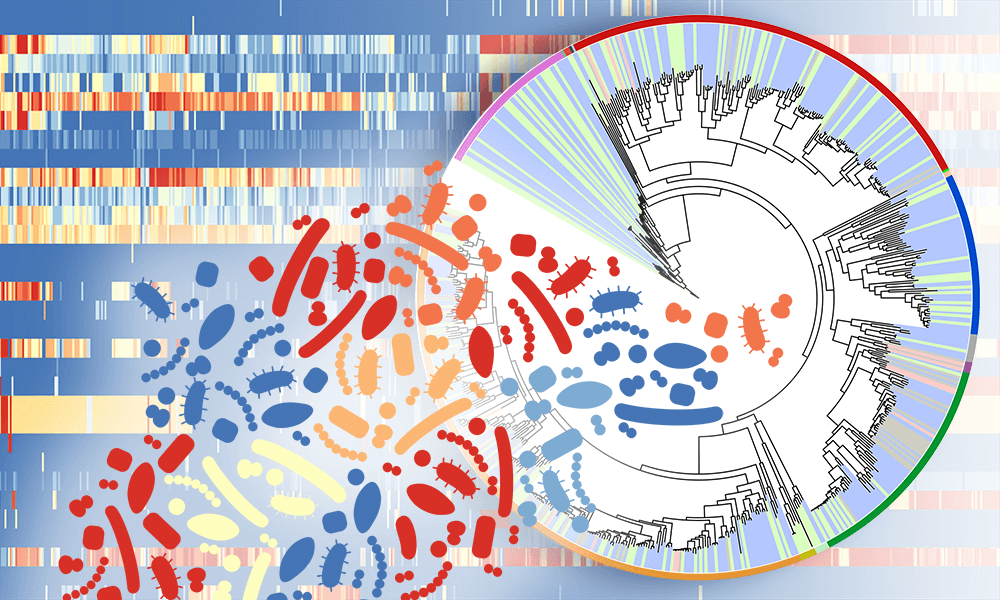

To make sense of such situations, scientists developed a robust statistical approach that involves analysing all available studies on a given topic: meta-analysis. Because the studies might have been carried out in slightly different ways, their results are first converted into a common currency so they can be compared.

This common currency is a measurement of how large the effect being studied is (effect size). An example is how much a particular medical treatment increases patients’ odds of survival compared with a placebo or another treatment.
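To make the idea of an effect size concrete, here is a minimal sketch of one common choice for survival data, the odds ratio. The patient counts are invented for illustration and the function name `log_odds_ratio` is hypothetical; the variance formula is the standard large-sample approximation for a log odds ratio.

```python
import math


def log_odds_ratio(survived_treated, died_treated, survived_control, died_control):
    """Return (log odds ratio, approximate variance) for a 2x2 table of counts."""
    odds_treated = survived_treated / died_treated
    odds_control = survived_control / died_control
    log_or = math.log(odds_treated / odds_control)
    # Standard large-sample variance of a log odds ratio: sum of reciprocal counts.
    variance = (1 / survived_treated + 1 / died_treated
                + 1 / survived_control + 1 / died_control)
    return log_or, variance


# Invented trial: 30 of 40 treated patients survive, 20 of 40 controls survive.
log_or, variance = log_odds_ratio(30, 10, 20, 20)
odds_ratio = math.exp(log_or)
print(f"odds ratio = {odds_ratio:.2f}, variance of log odds ratio = {variance:.3f}")
```

Working on the log scale is a convention rather than a requirement. It makes the effect size roughly symmetric around zero, which is convenient when results from several studies are combined.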

Effect sizes are then statistically combined across the different studies to estimate the overall effect. Larger studies usually contribute more to the overall effect estimate than smaller, less precise studies. We then evaluate whether it is a positive or negative effect and whether it is big enough to be of importance.
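One simple way to do this combining is inverse-variance weighting, sketched below under the assumption that every study estimates the same underlying effect (a so-called fixed-effect model). The effect sizes and variances are invented, and `pool_fixed_effect` is a hypothetical helper name; real meta-analyses often use more elaborate random-effects models.

```python
import math


def pool_fixed_effect(effects, variances):
    """Combine effect sizes, weighting each study by the inverse of its variance."""
    weights = [1.0 / v for v in variances]
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    standard_error = math.sqrt(1.0 / sum(weights))
    return pooled, standard_error


# Invented data: three studies; the third is the largest, so its variance is smallest.
effects = [0.2, 0.6, 0.4]
variances = [0.04, 0.25, 0.01]

pooled, standard_error = pool_fixed_effect(effects, variances)
# Approximate 95% confidence interval, assuming a normal distribution.
ci_low = pooled - 1.96 * standard_error
ci_high = pooled + 1.96 * standard_error
print(f"pooled effect = {pooled:.3f}, 95% CI = ({ci_low:.3f}, {ci_high:.3f})")
```

In this sketch the precise third study carries most of the weight, so the pooled estimate sits close to its value of 0.4. An interval that excludes zero suggests the overall effect is positive, although whether it is big enough to matter is a separate judgement.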

The next step in the meta-analysis is to find out how much the effect varies between studies. If the effect varies a lot, then it is important to explore the causes of this variation. For instance, it is likely that the effects of insecticides on pollinators depend on the dose of the chemical. Similarly, the effect of a medical treatment may differ depending on the patient’s age and previous medical history.
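A common way to quantify this variation is Cochran's Q statistic together with the I² index, which estimates the share of the observed variation that reflects real differences between studies rather than chance. The sketch below uses invented numbers and a hypothetical function name, `heterogeneity`; it is an illustration, not the procedure used in any particular study mentioned here.

```python
def heterogeneity(effects, variances):
    """Return Cochran's Q and the I-squared index (as a percentage)."""
    weights = [1.0 / v for v in variances]
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    # Q: weighted sum of squared deviations of each study from the pooled effect.
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, effects))
    degrees_of_freedom = len(effects) - 1
    # I-squared: share of Q beyond what chance alone would produce, floored at zero.
    i_squared = max(0.0, (q - degrees_of_freedom) / q) * 100 if q > 0 else 0.0
    return q, i_squared


# Invented data: three equally precise studies with very different results.
q, i_squared = heterogeneity([0.1, 0.5, 0.9], [0.01, 0.01, 0.01])
print(f"Q = {q:.1f}, I-squared = {i_squared:.1f}%")
```

A high I² like the one in this example is a signal to look for explanations, such as grouping the studies by dose or by patient age and comparing the effects between groups.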

Making sense of contradictory findings

The results of meta-analyses provide very important information for policy and decision makers and often challenge expert opinions. As I co-wrote in a recent paper in Nature, meta-analysis has helped to establish evidence-based practice and resolve many seemingly contradictory research outcomes in fields from medicine to social sciences, ecology and conservation.

In the case of neonicotinoid research, many studies tested the effects on pollinators but only some of them reported negative effects whereas others found no effect. This initially led to a conclusion that neonicotinoids posed only a negligible risk to honey bees.

Then, in 2011, a meta-analysis of 14 published studies tested the effects of one neonicotinoid insecticide called imidacloprid. It found that the amount of imidacloprid that honey bees would typically receive in the wild wouldn’t kill them. But it also showed that the insecticide did reduce the bees’ performance (measured in terms of their growth and behaviour).

The meta-analysis also explained why many published trials reported no effects of neonicotinoids on honey bees. It turned out these trials were far too small and lacked the statistical power required to detect effects other than death. Only by statistically combining the results of these small trials in a meta-analysis were the researchers able to detect the effects.
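The gain in statistical power from combining small trials can be shown with a toy calculation. The five "trials" below are invented and are not the data from the imidacloprid meta-analysis; each reports the same modest negative effect with a large standard error, and they are pooled with simple inverse-variance weighting, which is an assumption of this sketch.

```python
import math

# Invented data: five small trials, each with effect -0.3 and variance 0.04.
effects = [-0.3] * 5
variances = [0.04] * 5

# z-score of each trial on its own; |z| below 1.96 is not significant at the 5% level.
single_z = [e / math.sqrt(v) for e, v in zip(effects, variances)]

# Pool the trials, weighting each by the inverse of its variance.
weights = [1.0 / v for v in variances]
pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
pooled_se = math.sqrt(1.0 / sum(weights))
pooled_z = pooled / pooled_se

print("individual z-scores:", [round(z, 2) for z in single_z])
print(f"pooled z-score: {pooled_z:.2f}")
```

Taken one at a time, none of these trials would count as evidence of harm. Pooled, the same data give a much smaller standard error, and the effect becomes clearly distinguishable from zero.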

The negative effects of neonicotinoids on pollinators have also been confirmed in another recently published meta-analysis, which combined the results of over 40 published studies on the effects of neonicotinoids on beneficial insects. This revealed that these pesticides negatively affect the abundance, behaviour, reproduction and survival of these insects.

Meta-analyses have spread to many fields of research over the last few decades. They are used to compare the effectiveness of different drugs for a particular ailment, and they influence government policies ranging from education to crime prevention.

But this has created an interesting dilemma. Who should be valued and rewarded more: a scientist who conducts an original investigation or the scientist who combines the results of original studies in a meta-analysis? Are meta-analysts just “research parasites” who do not generate data themselves, but use other people’s work to publish high profile papers?

Instead of emphasising the difference between these two types of research, it might be more helpful to view them both as part of the practice of modern science. Both primary researchers and research synthesists are crucial for scientific progress.

They can be viewed as the brick makers and the brick layers involved in building an edifice of knowledge. Without meta-analysis, the results of individual studies will remain a pile of bricks of different shapes and sizes, puzzling to look at, but effectively useless for making decisions and policies.

Of course, meta-analyses are also subject to many pitfalls and are only snapshots of scientific evidence at a given point in time. But they provide a safer starting point for drawing conclusions. So the next time you see a news headline that seems to contradict one you read yesterday, remember to check whether the paper on which the story is based is a single study or a meta-analysis based on a compilation of results from dozens of studies.

Julia Koricheva is a professor of ecology at Royal Holloway, University of London. Follow her on Twitter @KorichevaLab

This article was originally published at the Conversation as “Neonicotinoid ban: how meta-analysis helped show pesticides do harm bees” and has been republished here with permission.