…

At Uber, such applications might include driving autonomous cars, setting customer prices, or routing vehicles to passengers. But the team, part of a broad research effort, had no specific uses in mind when doing the work. In part, they merely wanted to challenge what Jeff Clune, another Uber co-author, calls “the modern darlings” of machine learning: algorithms that use something called “gradient descent,”

…

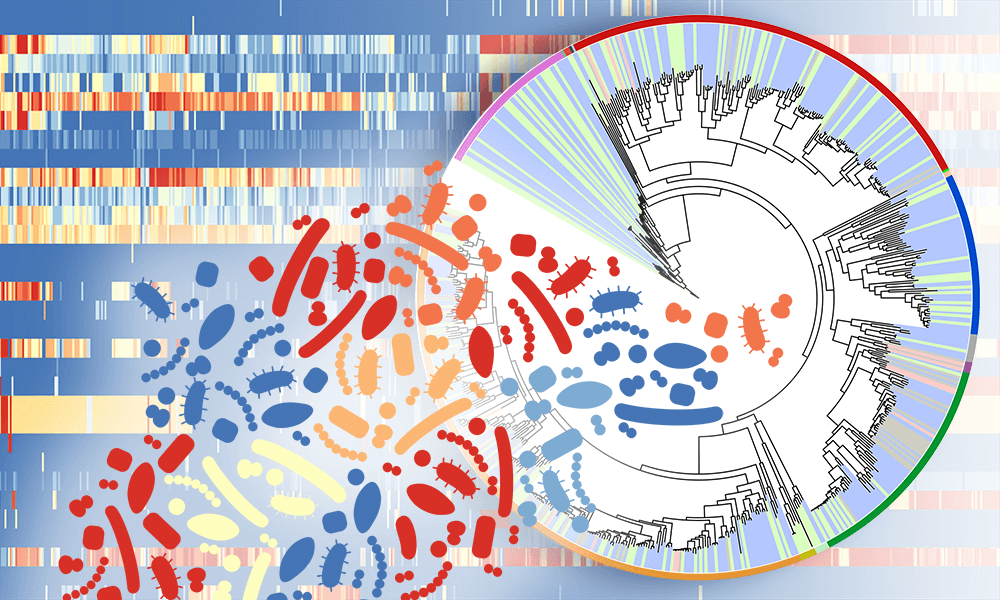

The most novel Uber paper uses a completely different approach that tries many solutions at once. A large collection of randomly programmed neural networks is tested (on, say, an Atari game), and the best are copied, with slight random mutations, replacing the previous generation. The new networks play the game, the best are copied and mutated, and so on for several generations.
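The loop described above can be sketched in a few lines of Python. This is a minimal illustration, not the Uber team's code: the toy `fitness` function stands in for a game score, a short list of numbers stands in for a network's weights, and names such as `TARGET`, `evolve`, `n_parents`, and `sigma` are hypothetical. Carrying the single best candidate over unchanged is also an assumption made here so that the best score never gets worse.

```python
import random

# Hypothetical stand-in for "network weights that play the game well".
TARGET = [0.5, -1.0, 2.0]


def fitness(params):
    """Toy score: higher is better, with a maximum of 0 at TARGET."""
    return -sum((p - t) ** 2 for p, t in zip(params, TARGET))


def evolve(pop_size=50, n_parents=10, generations=30, sigma=0.1, seed=0):
    rng = random.Random(seed)
    # Start from a large collection of randomly initialized candidates.
    population = [[rng.gauss(0, 1) for _ in TARGET] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        # Test every candidate and keep the best few as parents.
        ranked = sorted(population, key=fitness, reverse=True)
        parents = ranked[:n_parents]
        history.append(fitness(parents[0]))
        # Replace the generation with mutated copies of the parents.
        # Assumption: the top candidate is also kept unchanged.
        population = [parents[0]] + [
            [w + rng.gauss(0, sigma) for w in rng.choice(parents)]
            for _ in range(pop_size - 1)
        ]
    best = max(population, key=fitness)
    return best, history


if __name__ == "__main__":
    best, history = evolve()
    print("first-generation best score:", history[0])
    print("final best score:", fitness(best))
```

Note that nothing here computes a gradient: candidates improve only through copying, random mutation, and selection.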

…

[G]oing forward, the best solutions might involve hybrids of existing techniques, which each have unique strengths. Evolution is good for finding diverse solutions, and gradient descent is good for refining them.

Read full, original post: Artificial intelligence can ‘evolve’ to solve problems