Fake news has become a widespread and insidious problem, and in a year when we’re dealing with both a global pandemic and the possible re-election of Donald Trump as US president, a more powerful and lifelike text-generating AI seems like one of the last things we need.

Despite the potential risks, though, OpenAI announced late last month that GPT-2’s successor is complete. It’s called—you guessed it—GPT-3.

…

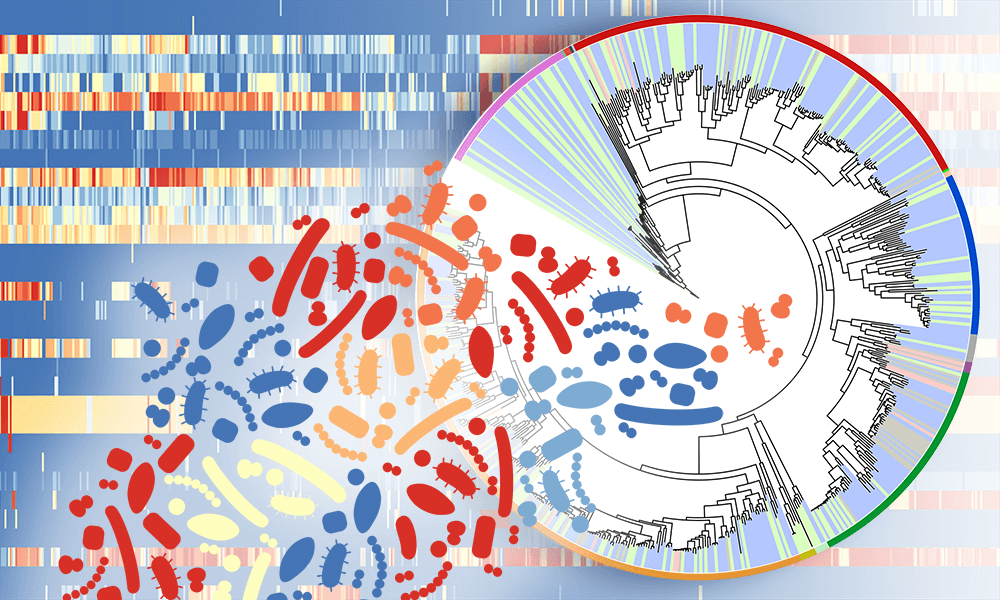

And it doesn’t end with words—GPT-3 can also figure out how concepts relate to each other, and discern context.

In the paper, the OpenAI team notes that GPT-3 performed well at translation, question answering, and reading-comprehension exercises that required filling in blanks where words had been removed. They also say the model was able to do “on-the-fly reasoning,” and that it generated sample news articles 200 to 500 words long that were hard to tell apart from ones written by people.

…

At the beginning of this year, an editor at The Economist gave GPT-2 a list of questions about what 2020 had in store. The algorithm predicted economic turbulence, “major changes in China,” and no re-election for Donald Trump, among other things. It’s a bit frightening to imagine what GPT-3 might predict for 2021 once we input all the articles from 2020.